Business challenge

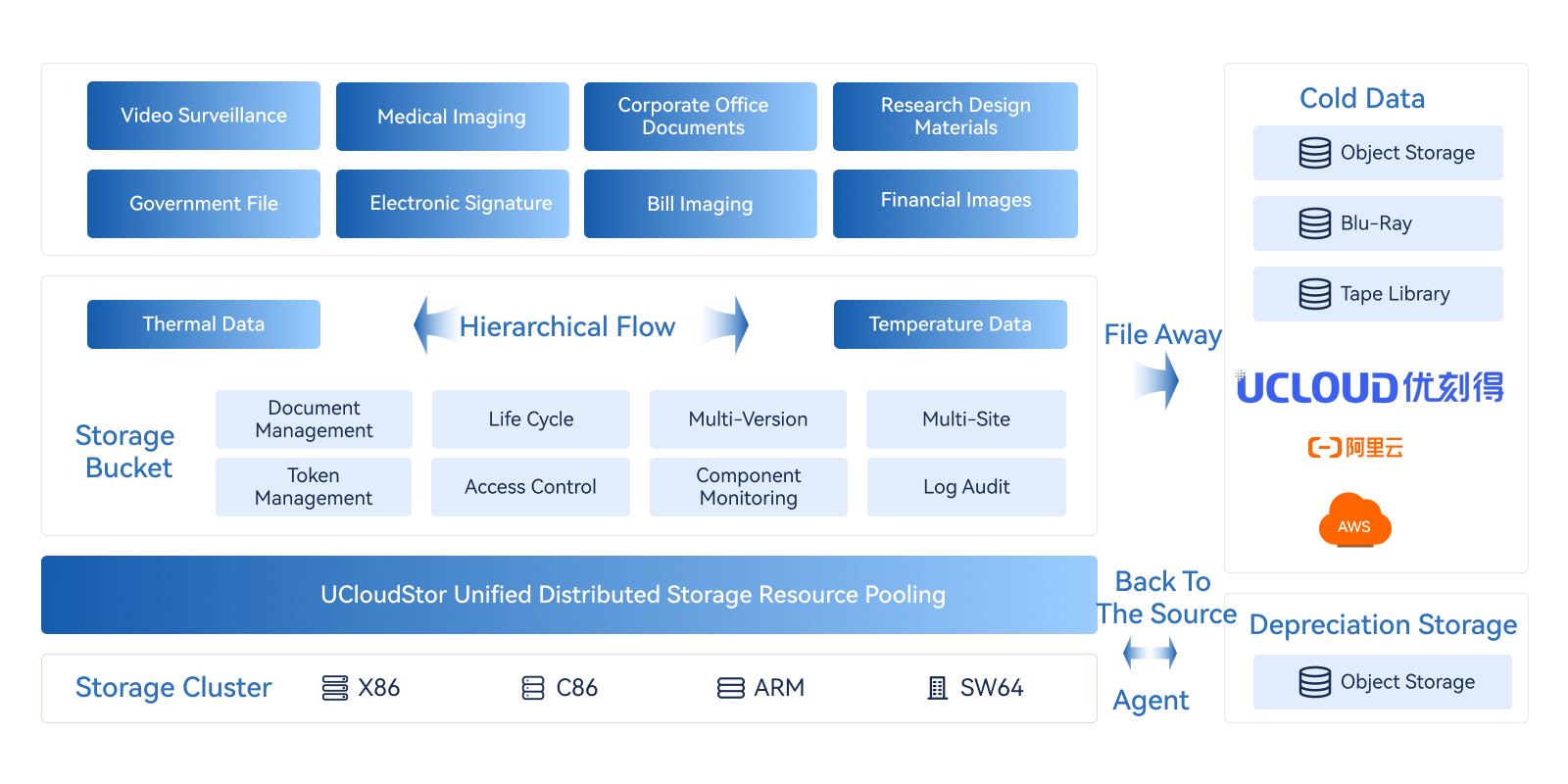

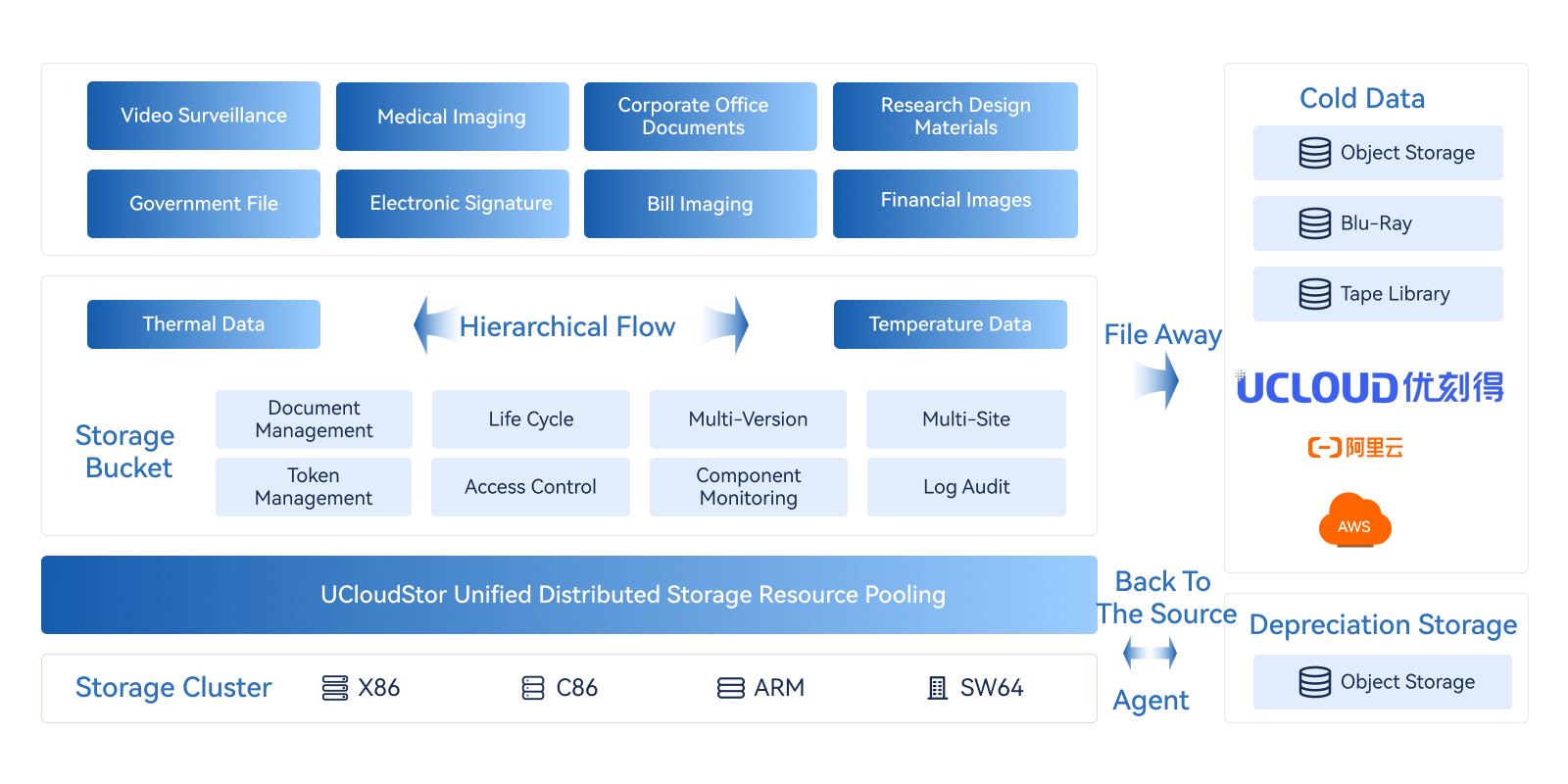

Solution architecture

In the era of big data, enterprises face the challenge of processing and storing large amounts of unstructured data. This massive-data archiving solution, built on a standard S3 object storage service, supports data lifecycle management.

As massive volumes of data grow rapidly and must be retained long term, traditional storage struggles to keep up because of its limited scalability.

The growing diversity of unstructured data makes it difficult to share, reuse, and centrally manage data across applications.

Massive, diverse data with differing access requirements and lifecycles is difficult to manage automatically.

Multi-site synchronization provides data center-level disaster recovery, improving service continuity.

Planning multiple clusters for different performance and capacity levels meets the requirements of diverse service scenarios.

Multi-protocol access to the same data facilitates data flow and reuse.

Automated data migration and archiving, together with back-to-source agents, simplify enterprise data operations and maintenance.

Fine-grained policies control which data is synchronized across multiple sites, improving data reliability. A multi-level fault domain mechanism ensures service data availability while meeting data compliance and security requirements.

All-flash or cache-accelerated storage clusters meet high-performance read and write requirements. Combined with low-cost, high-capacity storage clusters and data lifecycle management capabilities, they allow massive data to be stored and archived at low cost.

By defining lifecycle rules, you can automatically migrate and archive data, change storage classes, and delete data based on data characteristics and requirements, optimizing storage resource utilization and data management efficiency.
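As an illustration of such lifecycle rules, the following Python sketch builds a rule in the standard S3 `LifecycleConfiguration` format (a rule with `Filter`, `Transitions`, and `Expiration`): objects under a prefix move to a cheaper storage class, then to an archive class, and are finally deleted. The helper name `build_lifecycle_configuration`, the `logs/` prefix, the day thresholds, and the storage class names (`STANDARD_IA` and `GLACIER` are AWS class names) are all assumptions for illustration; an S3-compatible service may use different class names, so check the product documentation.

```python
import json


def build_lifecycle_configuration(prefix, transitions, expire_after_days):
    """Build an S3-style lifecycle configuration for objects under `prefix`.

    Hypothetical helper, not part of any product API.
    transitions: list of (days, storage_class) pairs, e.g. move to a cheaper
    class first and to an archive class later.
    expire_after_days: object age in days at which objects are deleted.
    """
    days = [d for d, _ in transitions]
    # Each transition must happen later than the previous one, and all of
    # them must happen before the objects expire.
    if days != sorted(days) or len(set(days)) != len(days):
        raise ValueError("transition days must be strictly increasing")
    if days and days[-1] >= expire_after_days:
        raise ValueError("objects must be transitioned before they expire")

    rule = {
        "ID": "tier-and-expire-" + (prefix.strip("/") or "all"),
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        # Change storage class as objects age (assumed AWS class names).
        "Transitions": [
            {"Days": d, "StorageClass": cls} for d, cls in transitions
        ],
        # Delete objects once they reach the expiration age.
        "Expiration": {"Days": expire_after_days},
    }
    return {"Rules": [rule]}


if __name__ == "__main__":
    # Hypothetical policy: infrequent-access tier after 30 days,
    # archive tier after 90 days, delete after 365 days.
    config = build_lifecycle_configuration(
        "logs/", [(30, "STANDARD_IA"), (90, "GLACIER")], 365
    )
    print(json.dumps(config, indent=2))
```

With boto3, a configuration like this could be applied through `put_bucket_lifecycle_configuration(Bucket=..., LifecycleConfiguration=config)` on an S3 client created with `endpoint_url` pointing at the storage service; which fields are honored depends on the service's level of S3 compatibility.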